This is a discussion of a file compressor and a file decompressor written in tinyC. It was mentioned in 2010 on the not just tiny-C programming group. The idea goes back to an article called A Poor Person's Spelling Checker (Dr. Dobb's, 1982), which used compressed dictionary files.

Here's a link to the original article: http://primepuzzle.com/ppspell/pps.html

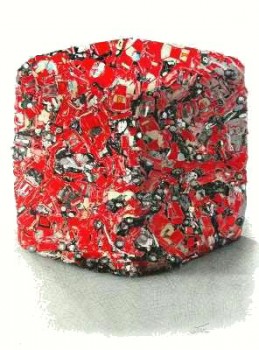

Baldaccini, César (1921-1998) - Compressed Ferrari (lithograph)

Files handled by the programs are "dictionary files". They hold one word per line. Each word uses only the 26 capital letters and is followed by either a carriage return/linefeed delimiter or just a linefeed delimiter. The compression algorithm is based on the following scheme:

Characters are divided into two classes, "common" and "rare." Here are the 15 "common" characters (14 letters plus the newline):

EISNATROLDCUGP\n

where \n stands for the newline (aka linefeed) character. Here are the 12 "rare" characters:

MHBYFVWKZXQJ

The first byte of a compressed file is a "signature byte" of hexadecimal FF.

If the file to be compressed contains the following words (one per line)

A ABLE ABORT ... DOERS ZCPR HERO REDUCE BADDEST UNIVERSE BLINDING CON PESKY MICE

the compressed file would look like so:

FF 4E 4F 28 0E 4F 27 65 E4 F2 76 50 9E 4F 27 65 13 CE 4F 27 65 2E 4F 27 B5 E4 F2 7F 50 E4 F2 27 F4 61 CC 13 87 75 8F 3E 4F 22 78 B5 0E 4F 22 78 B5 08 F3 E4 F2 25 64 A5 E4 AA 08 06 45 76 E4 AA 0D 5E 4A A0 D5 4F 28 0E 4A A0 22 E4 AA 02 20 9E 4A A0 22 1F 28 0E 4A A7 F0 F0 79 45 0E 4A A7 F0 D8 12 F1 E4 AA 76 91 3C E4 AA 7B 35 E4 AA BF 0B 84 50 E4 AA B6 45 0E 4A F1 10 F5 09 E4 AF 73 7F 68 09 C0 E4 A6 72 2E 4A 51 F5 45 09 E4 A5 B4 8E 4A 5B 48 8F 3E 49 4F 0E 49 4D 5E 49 9E 49 90 9E ... 61 F5 78 7B 2E 43 37 F3 13 CE F5 06 F2 14 C0 ED B6 D7 65 13 CE 80 C1 51 F0 45 0E F2 0A 7F 01 3C E9 1F 4F 41 AB 85 EA B8 8E C0 F0 2E F2 04 61 3C E1 90 35 1F 4F 31 3C EF 67 6F 0F 41 CF 15 06 E4 F4 F4 81 A5 E9 70 62 EF 8A D6 EF 10 67 E6 09 BA 0E F2 49 90 25 EB 31 F5 06 20 EF 28 13 91 3C EA 73 ED 02 F7 F3 EF 01 A0 EF CC

Explanation:

Byte 0 is a "signature" byte.

Byte 1 has two hexadecimal nibbles (a "nibble" is 4 bits). The 4 is there because A is the 4th character in the "common" characters (we index using a base of 0). The E is there because there's a newline after the leading word "A" in our input file. Newline is the 14th character in the "common" characters (again indexing from 0), and E is hexadecimal for decimal 14.

Byte 2 has two hexadecimal nibbles. The 4 is there because A is the 4th character in the "common" characters. The F signals that we have a "rare" character.

Byte 3 has two hexadecimal nibbles. The 2 is there because B is the 2nd character in the "rare" characters. The 8 is there because L is the 8th "common" character. Byte 4, 0E, finishes the word ABLE: 0 for E and E for the newline.

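This compression method thus requires only one nibble for each "common" character. Each "rare" character takes two nibbles (i.e. one byte's worth): the escape F followed by the character's index in the "rare" list. File size is cut roughly in half by this relatively simple method. Nibbles are packed left to right, so the first nibble of each pair is the left (high) half of its byte. "Common" and "rare" characters can occur at any position. As a result, the F that signals "rare" mode might occur as a leading nibble or a trailing nibble. For example, it is the trailing nibble of byte 2 (4F) but the leading nibble of byte 9 (F2).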

End of file details:

If you find you have an unwritten nibble at the end of the compression, construct a full byte to write by using an F for the right nibble. Put this byte. Then put a hexadecimal CC as the last byte. If you don't have an unwritten nibble at the end, just put a hexadecimal FC as the last byte in your compressed file.

A solution to the above is at

Here is a compressed file: http://primepuzzle.com/lee-bradley/test_now.lcx

To decompress it (on Linux), use the command:

`./tc SamplePrograms/dompress.tc -r 'dompress"test_now.lcx","test_now.lex"'`